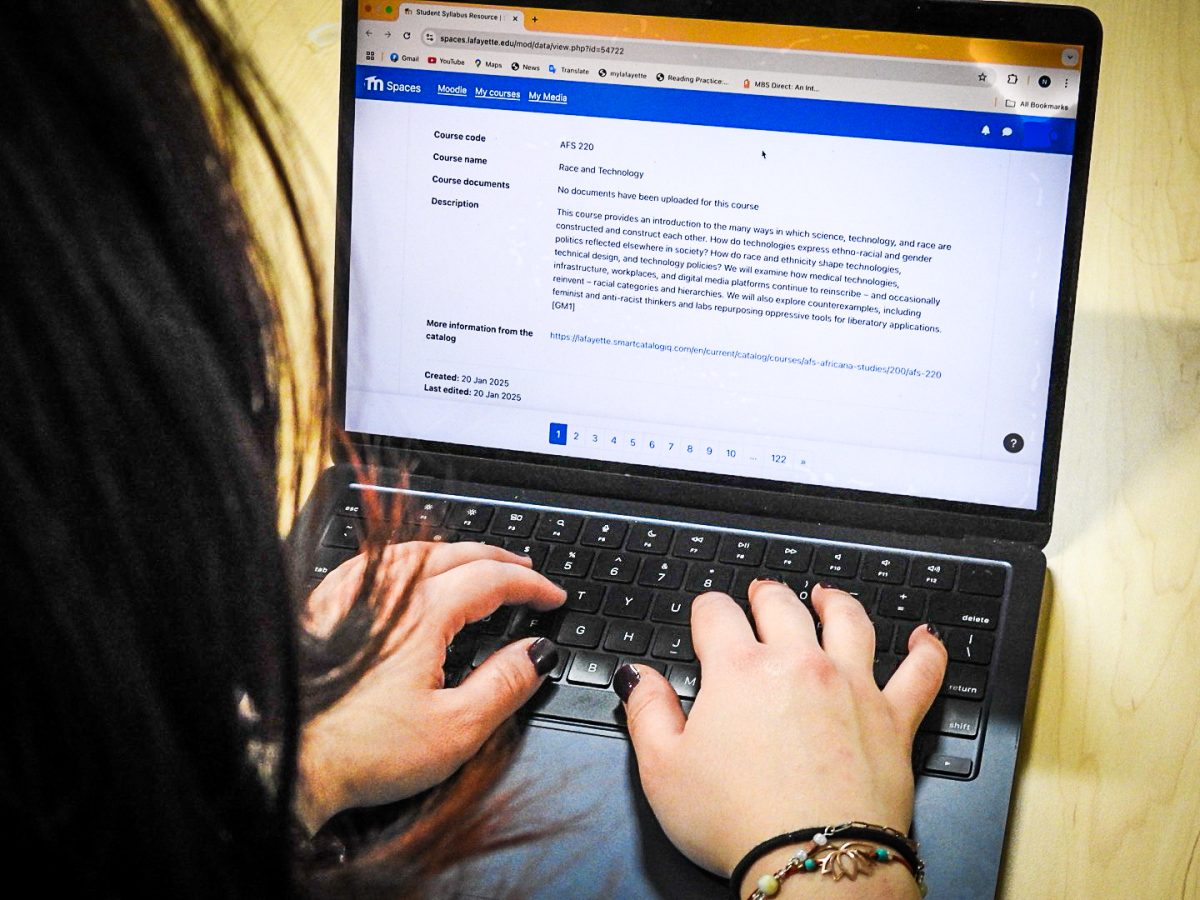

As data becomes an ever more powerful force in the hands of governments and corporations, the question of whom this data benefits has become increasingly important. This interaction between data, power and intersectional feminism is the theme of Lauren Klein’s new book “Data Feminism.”

This Monday over Zoom, Klein shared thoughts and insights from “Data Feminism,” which was co-authored by Catherine D’Ignazio. Funded by the Northeast Big Data Information Hub and sponsored by the Data Science Digital Scholarship Initiative, The Hanson Center for Inclusive STEM Education and the Women’s, Gender and Sexuality Studies Department, Klein’s talk marked the beginning of Women’s History Month at the college.

Klein is currently an associate professor in the English and Quantitative Theory and Methods departments at Emory University, as well as the director of the school’s Digital Humanities Lab. The first part of a two-part discussion around Klein’s book will be held on March 16.

According to Klein, data feminism is about much more than just gender. It is about justice, power and challenging the imbalances of power on a broader scale.

“Catherine and I see data feminism as a larger body of work that is together working to hold corporate and government actors accountable for all sorts of racist, sexist, classist data products…You might think of face detection systems that cannot see people of color…Hiring algorithms that demote applicants that went to a women’s school…Child abuse detection algorithms that punish poor parents,” said Klein.

Klein further explained how data, often referred to as “the new oil,” are seen by corporations as an untapped natural resource “that can lead to profit once they are processed and refined.” This way of viewing data can lead to the oppression of women, especially those who also belong to other marginalized groups, such as people of color and members of the LGBTQ community.

To further illustrate her point about the suppression of those “who chose to speak truth to power,” Klein referred to the case of Timnit Gebru, the former co-lead of Google’s ethical AI team. Gebru, an advocate who raised questions about unethical AI at Google, was fired when she demanded greater transparency in the internal peer-review process after her academic paper was retracted.

Referring to the role of feminism in this fight, Klein said, “We’re by no means the first ones to have made the case that this oppression is real, that it’s ongoing, and that it’s really necessary to dismantle. More specifically, feminism and intersectional feminism have been focused on this precise issue of dismantling instances of oppression and the forces of power that produce these instances of oppression.”

“Feminism teaches us to ask questions about power,” she elaborated.

Klein explained that “Data Feminism” takes inspiration from the “long history of feminist activism and critical thought” and applies this thinking to data science in order to understand the wider themes of injustice and oppression.

After her talk on the book, Klein opened the floor to questions.

One participant pointed out that if emotion became a norm in the depiction of data, it could be used as a tool of oppression by corrupt leaders.

“How do you get around that when trying to make emotion a norm in portraying data?” he asked.

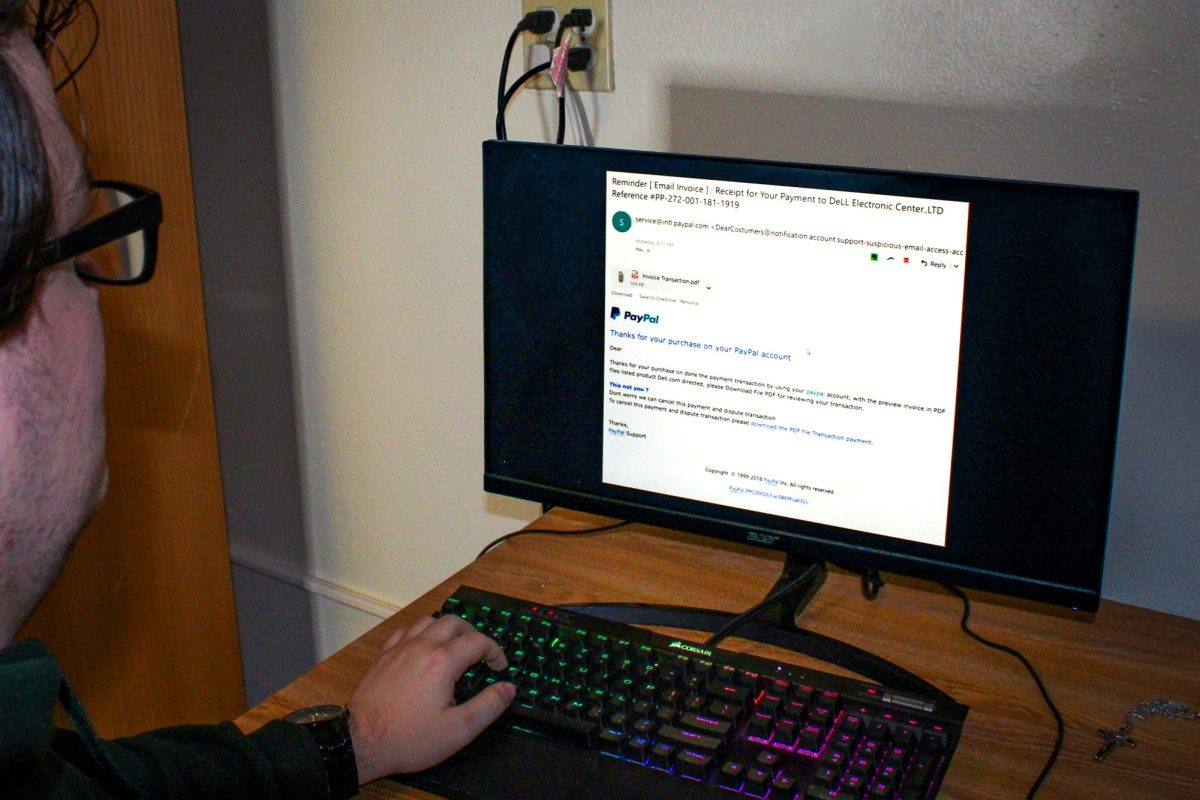

According to Klein, emotion is already in play in scientific-looking images. To illustrate her point, Klein talked about a paper led by an MIT graduate student about misinformation related to COVID-19.

“She discovered that all of these COVID misinformation circulators actually produce their own scientific-looking visualizations with fake data, made-up data, no data, precisely in order to get people to believe that their claims are more scientific than they actually are,” Klein said.

Klein clarified that although she does not believe all data visualizations need to be emotional, viewers must be aware of how data are being interpreted “emotionally and rhetorically.”

“We interpret every image and every image carries with it certain implications, and we don’t think hard enough about what those implications are,” Klein said. “So, the point is, if you really need to dig into the data here, you should think about how it impacts individuals or how the data do not capture every part of whatever experience or situation that we’re trying to report on.”